Evaluating current efforts to move science education forward, such as those framed by the Next Generation Science Standards, requires “assessments that are significantly different from those in current use” (National Academies report, Developing Assessments for the Next Generation Science Standards, 2014). Performance tasks in particular offer significant insights into what students know and are able to do. “Through the use of rubrics [for such tasks] … students can receive feedback” that provides them with a “much better idea of what they can do differently next time” (Conley and Darling-Hammond, 2013). Learning is enhanced! Building from a vision of the skills and knowledge of a scientifically literate student, rubrics can allow students (and other educators) to see a clearer path toward that literacy.

Of course, using rubrics with performance tasks is generally a more time-intensive process than creating a multiple-choice or fill-in-the-blank exam. For these tasks to be used as part of a standardized testing system or as reliable common assessments, scoring them requires additional technical considerations:

- Tasks must include a clear idea of what proficiency and non-proficiency look like;

- Scoring must involve multiple scorers who all have a clear understanding of the criteria in the rubric; and,

- Designers need to develop clear rubric descriptors and gather multiple student anchor responses at each level for reference.

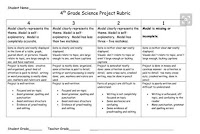

While this example comes from a 4th-grade classroom, secondary rubrics often have similar characteristics.

Considering alignment to the NGSS, and effective rubric qualities in general, there are several changes I’d make:

- What’s the science learning involved? They appear to be drawing or making a model. About what? What understanding would a proficient model of that phenomenon display?

- What would mistakes look like in a model? I’m a little worried that the “model” here is just memorizing and recreating a diagram from another source. Students’ models should look different. There might be a mistake in not including a key element of a model to describe the phenomenon, or not noting a relationship between two of those elements. Types of mistakes indicating students aren’t proficient should be detailed.

- Neatness and organization are important, but I question the use of those terms as their own category. I would connect that idea to the practice of scientific communication. Does the student clearly and accurately communicate his or her ideas? Do they provide the necessary personal or research evidence to support their ideas? The same is true within the data category. I’m more concerned about whether the students can display the data accurately and explain what the data means, than whether they use pen, markers, and rulers to make their graphs…

- It’s not a bad idea to connect to English Language Arts (ELA) standards, as is done here with the “project well-written” category. At the elementary level in particular that makes sense; however, I’d want to ensure that I’m connecting to actual ELA standards, such as the CCSS ELA anchor standards for writing, which emphasize ideas like using relevant and sufficient evidence. At upper grades, I’d also want to emphasize disciplinary literacy in science (e.g., how do scientists write?) over general literacy skills.

- This rubric actually does better than many at focusing on student capacity rather than behaviors. I see many rubrics that score responsibility and on-task time, rather than scientific skills and understanding. Check out Rick Wormeli’s ideas.

- What does “somewhat” really suggest? Is the difference between two mistakes and three mistakes really a critical learning boundary? I see a lot of rubrics that substitute always, sometimes, and never for a true progression of what students should know and be able to do. I also see many rubrics that differentiate rankings by saying things like no more than two errors, three to five errors, or more than five errors. What do we really know from that? What types of errors are made? Is it the same error multiple times? What exactly can’t the student do in one proficiency category vs. the next? I really can’t tell from a count of two vs. four errors.

- Use anchors for clarity – this rubric notes that models are “self-explanatory” and that sentences have “good structure.” Do students really know what that means? Have they seen and discussed a self-explanatory model vs. one that is not self-explanatory? If there is space, an example of a model or sentence meeting the standard could be embedded right into the rubric in the appropriate column. If there isn’t space, a rubric in a Google Doc could link to those types of examples.