In this post, I focus on formative assessment as ongoing assessment conducted as part of daily instruction to guide further instruction. These assessments are informal or formal checks of knowledge that happen in conjunction with instruction. I’m not considering more summative assessments such as end-of-unit tests or student projects, though, to be sure, all assessments should be formative in that the results are used to guide decisions about instruction.

Therefore, formative assessments probably won’t be the primary tools that teachers and administrators look at when determining whether their school as a whole is making progress toward its vision for science education. Instead, they are tools that influence daily decisions in the classroom, as well as student and/or teacher collaborative conversations. For example: Do I need to provide more time for peer discussion around crafting a procedure for their investigation? Should I include more scaffolding for students to create an effective data table? Notably, formative assessments might inform elements reported on a standards-based report card, but more often they will not.

In a classroom using the NRC Framework and/or the Next Generation Science Standards, educators aim to use three-dimensional instruction, including within formative assessment. Two examples of what that could look like in practice might help:

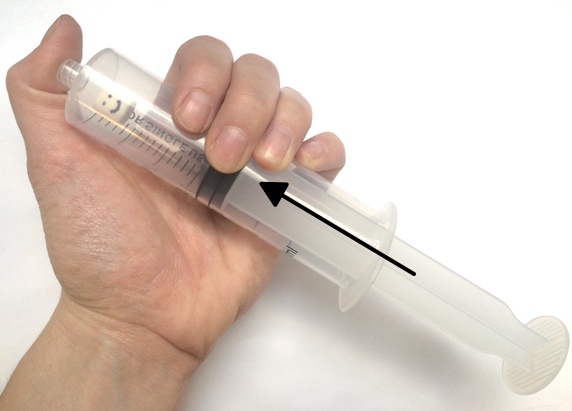

A fifth grade teacher shows students a large syringe full of air. He asks them to discuss with a neighbor what will happen if he plugs the end and pushes down. Students then get to try it out at their table. After a couple of minutes of students investigating the phenomenon, the teacher asks them to model the phenomenon by drawing a diagram of it, prompting them to consider how to show things they can and cannot see. Why does it get harder to push down? Students create the model on their own first, then discuss their model with a peer, making revisions to their models as desired and considering evidence. The teacher walks around asking probing questions such as, “What are those little circles in your syringe?” “Are they really that big?” “How do they look different before and after pushing down on the syringe?” As he walks, he jots down student names and occasional notes along the continuum of the modeling rubric shown below, which he has on a tablet or clipboard. Students then discuss their models in relation to the portion of the rubric focused on clearly representing all important aspects of the phenomenon (not yet relationships among components). The teacher walks around, looking and listening for important components of models, evidence, and comments to share with the class to illustrate this aspect of modeling. He has a couple of pairs of students share theirs, highlighting key criteria from the rubric where students appeared to be struggling. He also keeps his notes in a file with similar notes about students’ abilities with the science and engineering practices to look for progress over time and keep track of areas that need more work.

| Modeling - Subskill | 1 | 2 | 3 | 4 |
| --- | --- | --- | --- | --- |
| Identifying important components of a scientific model | Student represents the object or occurrence | Student represents the object (etc.) with details (evidence) related to the phenomenon | Student models the phenomenon (etc.) in such a way that it adequately represents important components of it (and not extraneous elements) and evidence gathered | Student models the phenomenon, explains why those are the important components based on evidence, and can analyze why components noted in one model better represent the phenomenon than those in another model |
| In This Example | Student draws a syringe | Student draws a pushed down syringe with packed little circles inside of it and a label of “gas” | Student draws one syringe pulled back and another one pushed down; each has a magnification “bubble” representing the scale of air particles, with them being closer together in the pushed down image | Student draws two syringes, as noted in 3, explains the importance of the scale and the particles being closer together, and notes why a model depicting air particles still far apart even with the syringe down is more accurate (e.g. evidence – can’t see the air) |

As a second example, a high school biology teacher asks students how many types of organisms there are in the world, leaving the question intentionally vague. Students discuss the answer in groups of three (no devices used at this point). The teacher pushes for justification and evidence for quantitative responses, as well as proper vocabulary. After a few minutes, she asks some student groups to share their answers and evidence. Then she asks, “Why is this diversity of life important?” After students share ideas with a partner, the whole class discusses it for a few minutes. The teacher then describes an investigation the class will do of “biodiversity” within their school grounds (as part of a larger unit on ecosystems, invasive species, and adaptations). The class goes on a quiet, mindful walk outside, where students observe and write on a card one or more testable questions about biodiversity on their school grounds and/or in the local area. At the end of the walk, the teacher collects the cards with students’ names and ideas on them. She quickly sorts them into yellow (needs more work) or green (testable); she also jots down some notes on where students are in general with this skill and adds them to her assessment file. The next day she anonymously shares a few of her favorite greens and yellows to illustrate key concepts in relation to testable questions, eliciting student ideas first in that conversation.

These formative assessments must be part of a larger cycle of guiding and reflecting on student learning. I like the APEX^ST model described by Thompson et al. in the November 2009 issue of NSTA’s The Science Teacher:

- Educator teams collaboratively define a vision of student learning.

- They teach and collect evidence of learning.

- They collaboratively analyze student work and other formative evidence of learning (such as conversations) to uncover trends and gaps.

- They reflect on how opportunities to learn relate to evidence of student learning.

- They make changes, ask new questions, conduct another investigation, etc.

Then they reflect again on evidence of student learning in light of their vision, considering changes to their vision and objectives as necessary.

One goal here, even in formative assessment, is to think about how student learning fits into the overall picture of three-dimensional instruction. Within the gas particle modeling task above, the science/engineering practice (SEP) is modeling, the disciplinary core idea (DCI) is 5-PS1.A (“gases are made from particles too small to see”), and the crosscutting concepts (CCCs) are cause and effect and scale. Within the biodiversity question task, the SEP is asking questions, the DCI is HS-LS2.C (“ecosystem dynamics”), and the CCC is systems and system models (though others could apply).

While those 3D connections are being made overall, the specific, in-the-moment, formative assessment goals here do not capture all three dimensions. In other words, the full task and work throughout the unit will involve students in all dimensions, but this formative snippet is really about one element of one practice in each case. Can students represent important aspects of a phenomenon within a model? Can students generate a testable question? The formative data gathered and acted on could also focus on their understanding of biodiversity or the particle nature of matter (DCIs). Or, it could focus on their ability to reason about the scale of a phenomenon (CCC). But, in this case the teacher kept things simple and more manageable with a specific, narrow goal in mind, which clearly related back to a larger vision for student learning and objectives for this unit. Importantly, while it’s a narrow goal, it’s still a deeper, conceptual learning goal. It’s not just an exit card asking students to regurgitate a fact or plug numbers into a formula.

Examples of assessments (not necessarily exemplars; could be formative or summative):

- Next Generation Science Assessment – project w/ the Concord Consortium

- NGSS-based sample classroom tasks - from Achieve

- Behavior of Air – Krajcik - (w/ several others in this chapter of the NRC report on assessment for the NGSS)

- Oregon Dept of Ed performance tasks

- Performance Assessment Resource Bank

- NECAP released items

Formative assessment resources:

- The Informal Formative Assessment Cycle – from STEM Teaching Tools

- Designing an Assessment System - from STEM Teaching Tools

- How Teachers Can Develop Formative Assessments that Fit a Three-Dimensional View of Science Learning

- Examining Student Work (NSTA article) – by J. Thompson et al.

- Research article - Flying Blind: An exploration of beginning science teachers’ enactment of formative assessment practices - by Erin Marie Furtak

- Literature review - Assessment for Learning (Formative Assessment)

Other resources to add? Put them in the comments below!

Thanks for sharing some great ideas. I am trying something new this year with one of our AP Psych teachers. We are attempting to team-teach AP Bio and AP Psych in a 2 period block. We are now working on the big concept overlaps and developing units to bring both disciplines together. Not everything will connect perfectly, but we are excited to see where this goes. I am always on the lookout for the next big idea to challenge our students!

Thanks,

Kevin Martin

Hartford Union HS